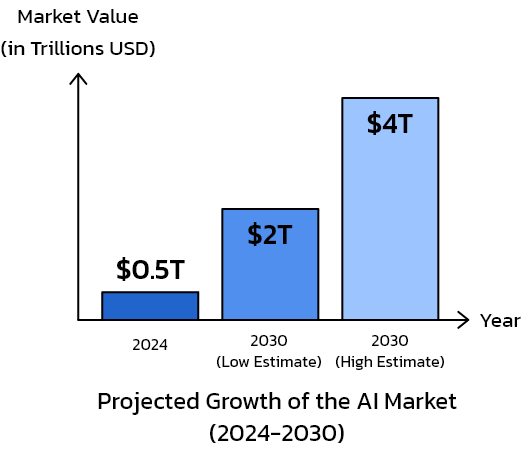

Artificial intelligence (AI) is transforming the world. By some estimates, its market value exceeded $500 billion in 2024 and could reach $2 trillion to $4 trillion by 2030, a dizzying pace. At its core, AI is the use of computer systems to simulate human intelligence: these systems learn, reason, and self-correct. It now touches nearly every aspect of our lives, and at the center of this technological revolution are data centers.

AI adoption is growing at an astonishing pace. Take ChatGPT: it reached a million users in just five days, a milestone that took Netflix several years. Uptake that fast suggests AI is headed for full-scale deployment. AI also thrives on data, the more the better, and computing power is just as basic a requirement. The storage and compute behind a service like ChatGPT are probably of a different order from what a streaming service like Netflix needs.

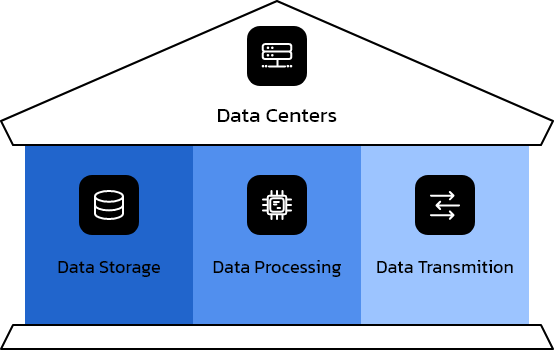

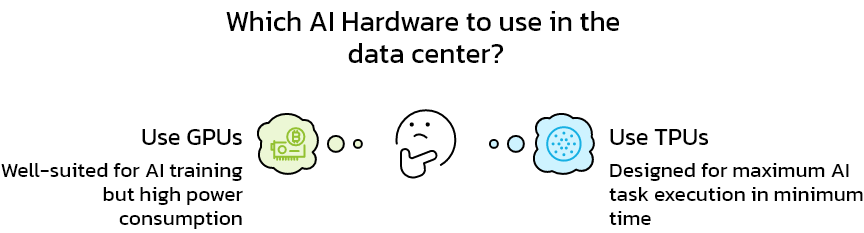

Data centers house rows of servers, storage systems, and the intricate networks that move information between them. These powerhouses of computation and memory handle tasks as diverse and essential as answering search queries, processing financial transactions, and enabling digital interactions. They operate mostly in the background, and most people are unaware of their presence or of the scale at which they run. For AI, what matters is how well a facility's infrastructure matches the processors that do the work. Three classes of processor handle most of the computation on these vast amounts of data: the central processing unit (CPU), the graphics processing unit (GPU), and the tensor processing unit (TPU).

GPUs are superb parallel processors, which makes them well suited to training AI models. A CPU is a general-purpose processor: it has fewer, more powerful cores, so it handles a wide variety of tasks well but cannot match a GPU's throughput on highly parallel work. Google's TPU is a custom chip built specifically to accelerate the tensor operations at the heart of machine learning.
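To make the point about parallelism concrete, here is a minimal sketch in plain Python. It is not GPU code, and the function names are illustrative assumptions. It only shows that every cell of a matrix product is an independent dot product, which is why hardware with many simple cores suits this kind of work.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row and one column; it depends on nothing else."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(a, b):
    """Compute every output cell one after another, as a single core would."""
    cols = list(zip(*b))  # columns of b
    return [[dot(row, col) for col in cols] for row in a]


def matmul_parallel(a, b, workers=4):
    """Hand each output row to a worker; no row waits on any other row."""
    cols = list(zip(*b))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: [dot(row, col) for col in cols], a))


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    # Both strategies produce the same product; only the scheduling differs.
    print(matmul_sequential(a, b) == matmul_parallel(a, b))
```

Python threads will not make this faster in practice. The sketch shows only that the work splits cleanly, and that independence is what a GPU or TPU exploits across thousands of cores.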

Bringing AI into data centers creates a number of problems. The first is power. AI workloads demand high-performance computing (HPC), and the systems that run them need a reliable, redundant power supply; without one, you are asking for trouble. Because these workloads run in parallel across many processors at once, the aggregate demand adds up quickly: for the largest facilities, it is on the order of half a gigawatt at the very least. Access to abundant power already shapes where facilities get built, as Facebook's data center in Sweden illustrates.
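A rough back-of-the-envelope sketch shows how a figure on the order of half a gigawatt can arise. Every input below is a hypothetical assumption chosen for illustration, not a measured or vendor figure: the accelerator count, the per-device wattage, and the overhead factor for the rest of the server. PUE (power usage effectiveness) is the ratio of total facility power to IT equipment power.

```python
def facility_power_mw(accelerators, watts_per_accelerator, server_overhead, pue):
    """Estimate total facility power in megawatts.

    accelerators          -- number of GPUs or TPUs (hypothetical)
    watts_per_accelerator -- power draw of one device in watts (hypothetical)
    server_overhead       -- multiplier for CPUs, memory, network (hypothetical)
    pue                   -- total facility power / IT equipment power
    """
    it_watts = accelerators * watts_per_accelerator * server_overhead
    return it_watts * pue / 1_000_000


if __name__ == "__main__":
    # Illustrative inputs only: 400,000 devices at 700 W each,
    # 1.5x server overhead, PUE of 1.2.
    estimate = facility_power_mw(400_000, 700, 1.5, 1.2)
    print(f"Estimated facility power: about {estimate:.0f} MW")
```

With these assumed inputs the estimate comes out at roughly half a gigawatt. Changing any one input moves the total proportionally, which is why cooling efficiency (PUE) matters as much as the chips themselves.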

The rapid development of artificial intelligence demands an equally swift response from regulators. The EU AI Act, for instance, places AI systems into four risk categories—unacceptable, high, limited, and minimal or no risk. This is the basis for compliance that industry will have to build on if it wants to avoid any of the hefty fines that the Act prescribes for noncompliance.

At the same time, the NIS2 Directive is widening the scope of EU cybersecurity regulation. Data center service providers and other parts of the internet's physical infrastructure fall within its scope, so operators that host "high-risk" AI systems may find themselves working through the lengthy, sometimes bewildering, requirements of both regimes.

The infrastructure that underpins our digital lives has to respond just as quickly, and nowhere is adaptation more critical today than in our data centers.

Complex algorithms require heavy computation, and growing reliance on AI will only increase the already surging demand for processing power. Complying with the new directives and regulations that govern AI will put additional pressure on data center architecture.

The story of artificial intelligence and data centers is one of ongoing progress and mutual reinforcement: each drives the growth of the other.